Overview

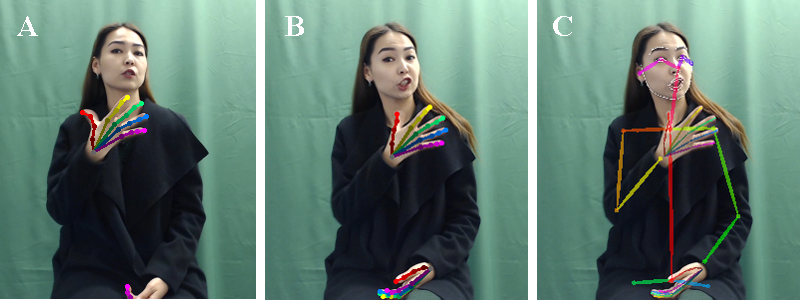

This paper presents results from ongoing work on real-time sign language recognition. The work is motivated by the need to differentiate between signs that are similar in their manual components but differ in the non-manual components that accompany any sign. To this end, we recorded 5200 videos of twenty frequently used signs in Kazakh-Russian Sign Language (K-RSL) that have similar manual components but differ in non-manual components (i.e., facial expression, eyebrow height, mouth, and head orientation). We conducted a series of evaluations to investigate whether non-manual components improve sign recognition accuracy. Among standard machine learning approaches, Logistic Regression produced the best results: 77% accuracy on the dataset with 20 signs and 77.3% accuracy on the dataset with 2 classes (statement vs. question).

The sign language used in Kazakhstan is closely related to Russian Sign Language (RSL), as are many other sign languages within the Commonwealth of Independent States (CIS). The closest corpus within the CIS area is the Novosibirsk State University of Technology RSL Corpus. However, it was created as a linguistic corpus for studying previously unexplored fragments of RSL, and is therefore not suited to machine learning. The creation of the first K-RSL corpus will change this situation, and the corpus can be used within the CIS and beyond.

Given the important role of non-manual markers, in this paper we test whether including non-manual features improves the recognition accuracy of signs. We focus on a specific case where two types of non-manual markers play a role, namely question signs in K-RSL. Similar to question words in many spoken languages, question signs in K-RSL can be used not only in questions ("Who came?") but also in statements ("I know who came."). Thus, each question sign can occur either with non-manual question marking (eyebrow raise, sideward or backward head tilt) or without it. In addition, question signs are usually accompanied by mouthing of the corresponding Russian/Kazakh word (e.g., kto/kim for "who" and chto/ne for "what"). While question signs are also distinguished from each other by manual features, mouthing provides extra information that can be used in recognition. The two types of non-manual markers (eyebrow and head position vs. mouthing) can therefore play different roles in recognition: the former can be used to distinguish statements from questions, and the latter can help distinguish different question signs from each other. We therefore hypothesize that the addition of non-manual markers will improve recognition accuracy.
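The hypothesis can be illustrated with a minimal sketch. It is a toy under stated assumptions, not the pipeline used in this work. The data are synthetic: a single hypothetical "manual" feature depends only on which sign is produced, and a single hypothetical "non-manual" feature (standing in for eyebrow height) depends only on whether the sign occurs in a question. The logistic regression is a small pure-Python implementation written for this example, and the function names `train_logreg`, `accuracy`, `make_sample`, and `split` are illustrative.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logreg(X, y, epochs=300, lr=0.5):
    """Fit binary logistic regression with batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def accuracy(model, X, y):
    """Fraction of samples whose predicted class matches the label."""
    w, b = model
    hits = sum(
        (sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) >= 0.5) == bool(yi)
        for xi, yi in zip(X, y)
    )
    return hits / len(X)

def make_sample(rng):
    # Assumption: synthetic stand-ins for real features extracted from video.
    sign = rng.choice([0, 1])          # e.g. "who" vs. "what"
    is_question = rng.choice([0, 1])   # 1 = question, 0 = statement
    manual = [sign + rng.gauss(0, 0.1)]             # depends on the sign only
    non_manual = [is_question + rng.gauss(0, 0.1)]  # depends on sentence type only
    return manual, non_manual, is_question

def split(data, use_non_manual):
    # Concatenate manual and non-manual features when requested.
    X = [m + nm if use_non_manual else m for m, nm, _ in data]
    y = [label for _, _, label in data]
    return X, y

rng = random.Random(0)
samples = [make_sample(rng) for _ in range(400)]
train, test = samples[:300], samples[300:]

results = {}
for name, flag in [("manual only", False), ("manual + non-manual", True)]:
    X_train, y_train = split(train, flag)
    X_test, y_test = split(test, flag)
    model = train_logreg(X_train, y_train)
    results[name] = accuracy(model, X_test, y_test)
    print(f"{name}: {results[name]:.2f}")
```

In this toy setting the manual feature carries no information about sentence type, so the statement vs. question classifier can only do well once the non-manual feature is added. Real features are noisier and partly correlated, so the gain on actual recordings would be expected to be smaller.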

K-RSL Project
